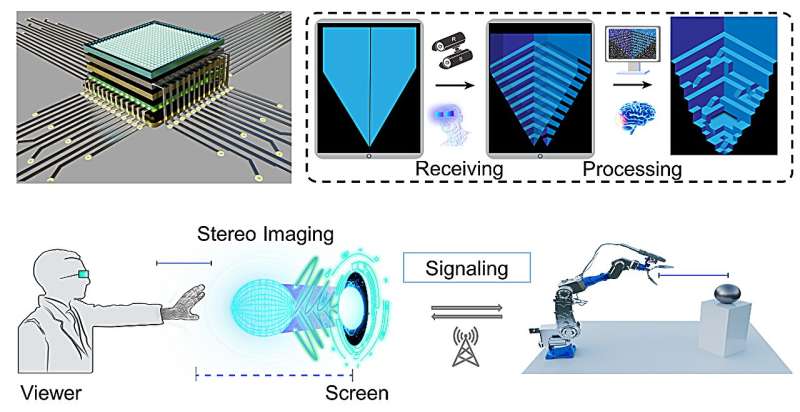

A 3D display system based on circularly polarized luminescence promises high-quality visuals, real-time interaction, and life-saving applications in robotics and rescue operations.

A team of scientists from the University of Science and Technology of China (USTC) has developed a 3D display panel based on circularly polarized luminescence (CPL). The research, led by Professors Zhuang Taotao and Yu Shuhong, introduces a new class of adaptable, high-performance 3D displays.

These advanced CPL-based displays are designed to significantly enhance human-computer interaction by delivering stereoscopic images with improved clarity and reduced eye strain. Unlike traditional 3D systems, the new panels offer broader viewing angles and integrate easily with smart polarized glasses for seamless image depth perception.

A key feature of the technology is the orthogonal-CPL-emission display mechanism. It delivers parallax images to both eyes simultaneously, enabling real-time depth perception and interactive manipulation through hand gestures. This creates an immersive 3D experience based on binocular disparity: the slight difference between the images seen by each eye, which the brain uses to perceive depth.
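To make the idea of binocular disparity concrete, the short sketch below uses textbook stereoscopic-display geometry (similar triangles), not the USTC team's method. It estimates how far away a point appears when its left-eye and right-eye images are offset horizontally on the screen. The function name, parameter names, and the 65 mm default eye separation are illustrative assumptions.

```python
def perceived_distance(viewing_distance_m, screen_parallax_m, eye_separation_m=0.065):
    """Estimate the apparent distance of a point shown on a stereoscopic screen.

    viewing_distance_m: distance from the viewer's eyes to the screen.
    screen_parallax_m:  horizontal offset between the right-eye and left-eye
                        images of the point on the screen. Positive values
                        (uncrossed) place the point behind the screen, negative
                        values (crossed) place it in front.
    eye_separation_m:   assumed distance between the viewer's eyes.
    """
    if screen_parallax_m >= eye_separation_m:
        # The two lines of sight are parallel or diverge, so they never meet.
        raise ValueError("parallax must be smaller than the eye separation")
    # Similar triangles: parallax / eye_separation = (Z - D) / Z
    return viewing_distance_m * eye_separation_m / (eye_separation_m - screen_parallax_m)


if __name__ == "__main__":
    # Viewer sits 0.6 m from the panel; try three parallax values.
    for parallax in (-0.065, 0.0, 0.0325):
        z = perceived_distance(0.6, parallax)
        print(f"parallax {parallax:+.4f} m -> apparent distance {z:.2f} m")
```

With zero parallax the point appears on the screen surface; crossed parallax pulls it toward the viewer and uncrossed parallax pushes it behind the screen.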

To bring this to life, the team developed a fabrication method combining microelectronic printing with self-positioning capabilities. This approach led to the creation of CPL microdevices with a luminescence dissymmetry factor (g_lum), a benchmark for CPL performance, reaching 1.0 under alternating electric fields.
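For context, the luminescence dissymmetry factor is conventionally defined from the intensities of left-handed and right-handed circularly polarized emission. The symbols $I_L$ and $I_R$ are introduced here for illustration and do not come from the article:

$$
g_{\mathrm{lum}} = \frac{2\,(I_L - I_R)}{I_L + I_R}
$$

Under this definition the factor ranges from $-2$ to $+2$, with $0$ meaning no net circular polarization. A magnitude of 1.0 corresponds to one handedness being three times as intense as the other, since $2(3 - 1)/(3 + 1) = 1$.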

The practical potential was demonstrated in a simulated rescue scenario. Using the 3D display’s depth-sensing system, researchers remotely operated robotic arms to safely extract a trapped individual, minimizing risk to both victim and rescuer.

This development marks a significant step toward integrating virtual and real environments. With wide-ranging applications in industrial automation, medical electronics, scientific instrumentation, and even aerospace, the technology opens new doors for interactive and responsive smart systems.

Reference: Qi Guo et al., Science Advances (2025). DOI: 10.1126/sciadv.adv2721